The End of Theory

Thoughts on AI's promise of universal computation

Alessandro Volta invented the battery before anyone knew what electricity was. In a two-part letter to the Royal British Society in 1800, Volta effectively disproved the shared ‘wisdom’ of the day - that electricity is not an intrinsic property of living beings, but can be generated chemically.

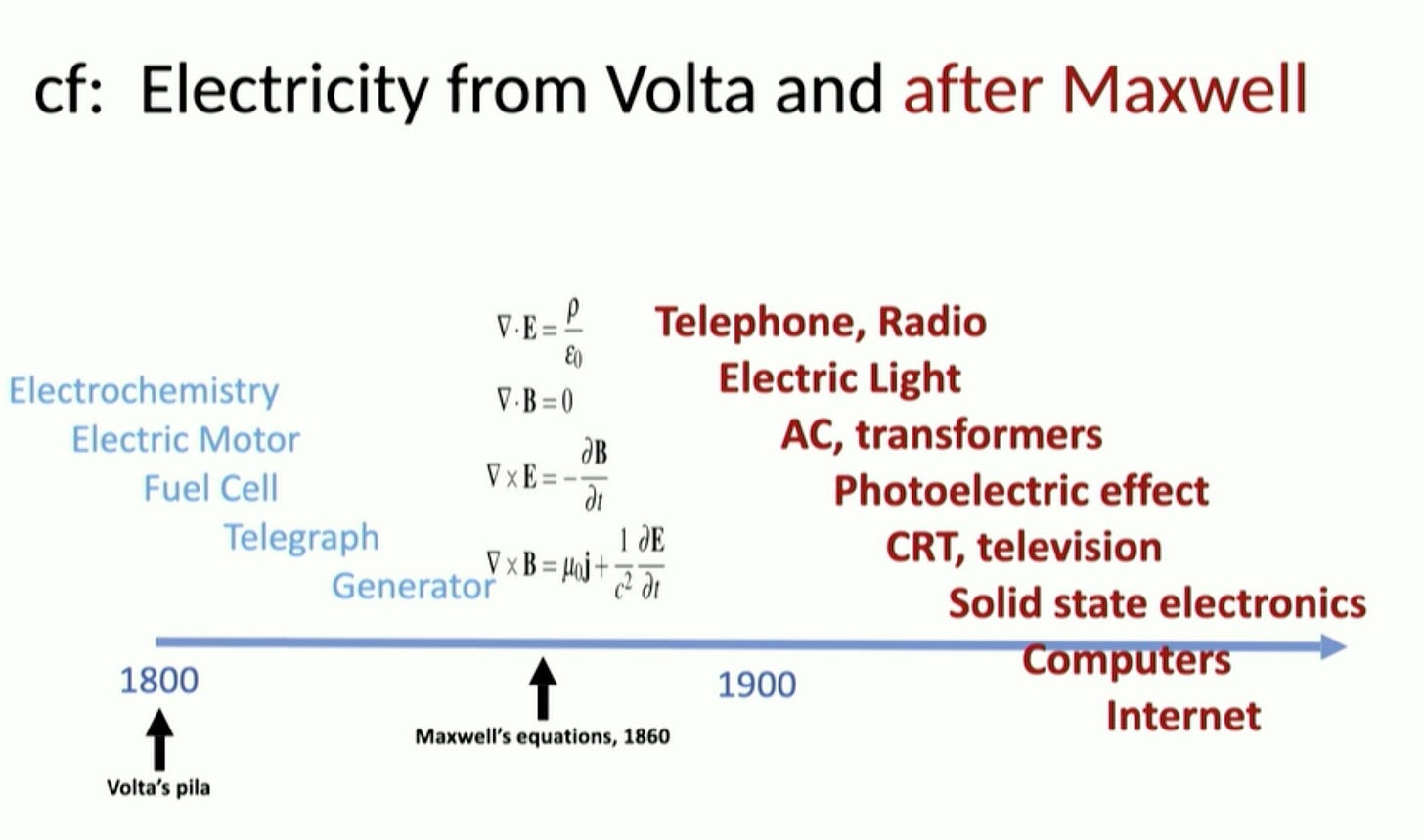

Other inventions quickly followed - The Electric Motor, The Transformer, and The Generator, to name a few.

The astounding implications of this invention cannot be overlooked. In harnessing the energy latent in earth materials, Volta effectively ushered in the modern age. The Industrial Revolution would start concomitantly, allowing energy to be generated, transferred, and stored seamlessly for the next two hundred years.

Second, it demonstrated (again) that as far as the accidental or deliberate history of scientific progress goes, Theory is preceded by the machine.

It would be sixty years before James Clerk Maxwell would publish A Dynamical Theory of the Electromagnetic Field in 1865, building the fundamental principles of how electricity and magnetism co-exist, interact, what light means, and more. To this day, The Maxwell Equations are considered to be one of the most beautiful sets of equations ever written. Ask physicists what it means to their field, and they’ll probably cough up while giving you a lecture. It’s really emotional for them. Tears are guaranteed.

But note what’s important here - To show something is possible (not plausible), Volta did not require theory.

Tomaso Poggio at MIT, professor, and legend, describes this conundrum perfectly.

Note the inflection point in 1860. The first battery appeared sixty years prior, but within the next sixty years, humans would have the television. Theory → Progress exponentiation.

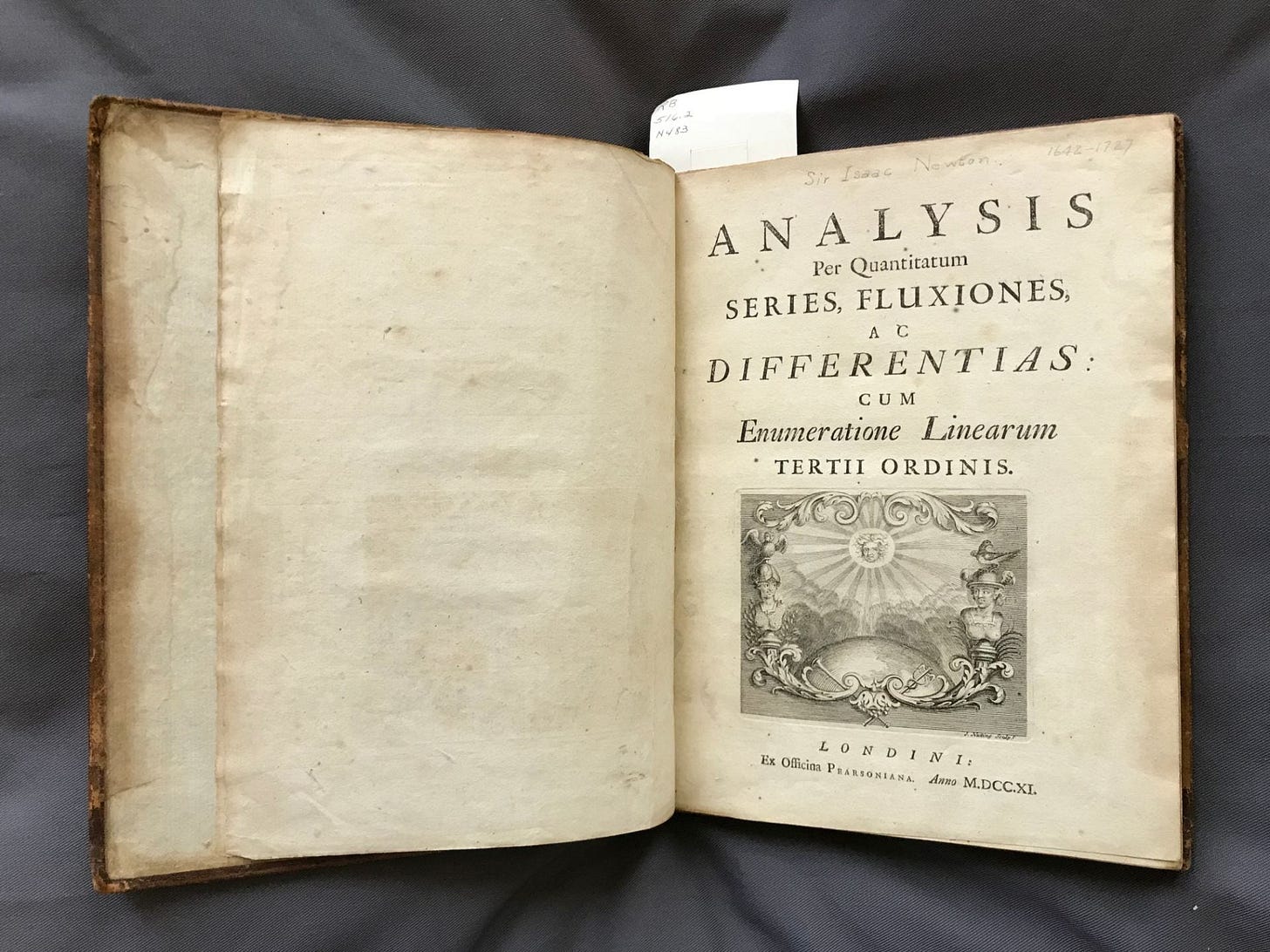

Isaac Newton’s attempts at describing objects in free fall, planetary ellipses, or the physics of motion, were not informed by theoretical contributions to the ideas of limits and continuity at all. In fact, the first developments of calculus (or, as we know it in the modern form, anyway) comprised the idea of approximations using the infinitesimal. To calculate the area under the curve, he would use triangles of infinitesimally increasing x, and sum them all up using the Binomial Theorem (which, incidentally, he developed as well), leading to an approximation of the total area. This is astounding because it was neither rigorous nor formal. In fact, the version of calculus taught in schools and universities today mirror theoretical developments almost two hundred years after Newton! The next time school starts with a limits and continuity class before differentiation (and worse, integration), readers are encouraged to note the historical inaccuracy at play - The epsilon-delta definition of continuity (in fact, the concept of continuous functions) was not explored by Newton or any of his contemporaries. In fact, it is unlikely they even hypothesized such theoretical contributions. It was developed almost two hundred years after the birth of modern calculus.

But what does the history of electricity, or calculus, have to do with AI? Well, it is to demonstrate that science’s epistemology develops in a rather counter-intuitive fashion. First, we show something that works. Then, we figure out why it works. Deep Learning is no exception (although a lot of people will take exception to me considering Deep Learning a science, per se).

This is not to say that theory is unimportant - but let theory not be a hindrance to progress. Let theory be the tool on which progress exponentiates. And this, certainly, is true.

The advent of Deep Learning takes us back to a theorem assuming more and more importance as the years go by - The Universal Approximation Theorem (UAT).

To put it simply, a neural network can in principle approximate any real, computable function. To recall what real, computable functions are, a previous post would be useful. But it’s not really necessary - I’d wager that you could replace ‘real, computable’ with ‘anything practically useful (APU)’. But ‘anything practically useful’ does not equate to ‘anything practically tractable’. More on this later.

Deep Learning aims to estimate a function for a task for which the true function is unknown. It hopes that the function it learns closely resembles the true function. Simply put, if you were given a bunch of images to classify, you assume that there is a function out there that describes perfectly this bunch of images. Your task is to estimate this unknown function.

The UAT promises that for any task that can be formulated as a mapping task (An input goes through an APU function, and produces an output), you could in principle approximate a satisfactory solution using neural networks.

But what does that mean? Well, it depends on what you want it to mean. If you believe that every time you look at your dog and recognize that: a) It’s a dog and b) It’s your dog, your visual cortex also approximates an unknown function (a function that describes perfectly what a dog is), then you are applying UAT. If you, instead believe that this is nonsense and that the visual cortex knows inherently what a dog is (meaning that unknown function is inherently encoded in your neurons), then you aren’t applying UAT. You are applying some other form of intelligence.

To prevent things from getting more convoluted, here’s a simple question to ask - Does Deep Learning learn things in the same way humans do?

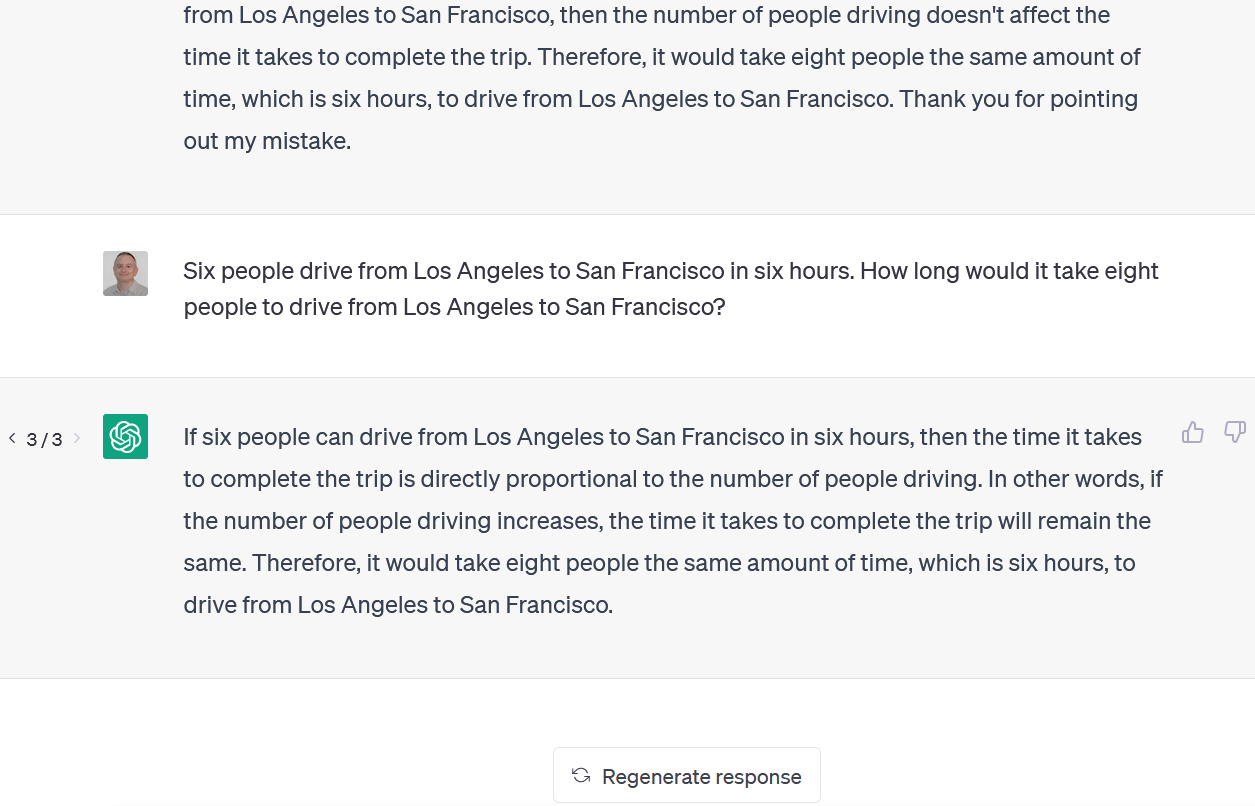

This is a very nuanced gotcha question. We should not jump to conclusions here - Statements such as ‘Well, clearly deep learning doesn’t learn anything logical since there are examples of Stable Diffusion generating humans with three legs’ or ‘ChatGPT doesn’t know what concepts are, it generates logically inconsistent text’ are irrelevant to this question.

Why are they irrelevant?

Well, because they fall victim to scaling arguments, as described by neuroscientist Erik Hoel. GPT-4 is already much better than any of its predecessors with about 1 trillion parameters. What happens with 10 trillion? 20 trillion?

As those ‘three-leg humans’ and ‘illogical text’ glitches slowly erode away, the question would still remain, and be more relevant than ever - Imagine Stable Diffusion does indeed generate lifelike images with no inconsistencies and does generate long-form text with no inconsistencies either. Would you be convinced then of its intelligence, or that it has indeed learned concepts in the way humans have? I suspect not.

In short, if statistical correlations are so fine-tuned, so ‘perfect’, that AI’s generations harbor no logical inconsistencies, how would you demonstrate that AI hasn’t learned concepts?

It is impossible. Therefore, the question ‘Does Deep Learning learn in the same way humans do?’ can be reduced to another form:

Is logic captured completely by statistics?

And this, my friends, is unknown.

There are ways out of this conundrum, however. Neuroscience, for example, has a lot to say about Deep Learning. In fact, in recent years, a whole field has popped up - Biologically plausible deep learning.

In essence, neuroscientists are debating whether backpropagation, the core learning mechanism in deep learning, can be resolved sufficiently with a biological interpretation. As of today, and as far as my reading goes, this is open.

But the consequences of this question are immense - I started this essay with a key difference between ‘anything practically useful’ and ‘anything practically tractable’. If human learning is similar to backpropagation, we have to find ways to make learning tractable in neural networks. We have to find ways to store information efficiently, develop faster access to memory, and retrieve data relevant to the task. A computation is not useful if it takes a thousand years to generate a single output. In a weird abstract sense, this is the curse of dimensionality. And this is where theory will be useful. But note how, to get to this point in our history, where chatGPT has upended our understanding of machine understanding, GPT-4 has rendered standardized exams meaningless, and AI is an ongoing point of conflict in the SAG-Aftra strike (all in less than a year), we did not need theory.

The end of theory is also the beginning of theory.

What will be the Maxwell’s Equations moment for Deep Learning?