The Myth of Hypercomputation

What computers can and cannot do, what they can and cannot conceivably do.

Martin Davis is Professor Emeritus at NYU, and famous for his contributions to

Hilbert’s Tenth problem. This 2004 paper discusses his critique of the

hypercomputation movement, conceived by connectionist academics to investigate

the limits of computations, and whether those limits can be broken.

Hypercomputation refers to the movement that aims to conceive of physical

systems to compute uncomputable functions. To understand this further, we

need to clarify the notion of “computability” first.

For a large period in history, dating back to at least the Islamic Golden Age, var-

ious scholars have been interested in representing solutions to different problems

as a mechanistic, step by step approach. These problems can be varied: Calcu-

lating the sum of two arbitrary numbers, calculating the product of numbers,

finding the greatest common divisors, etc.

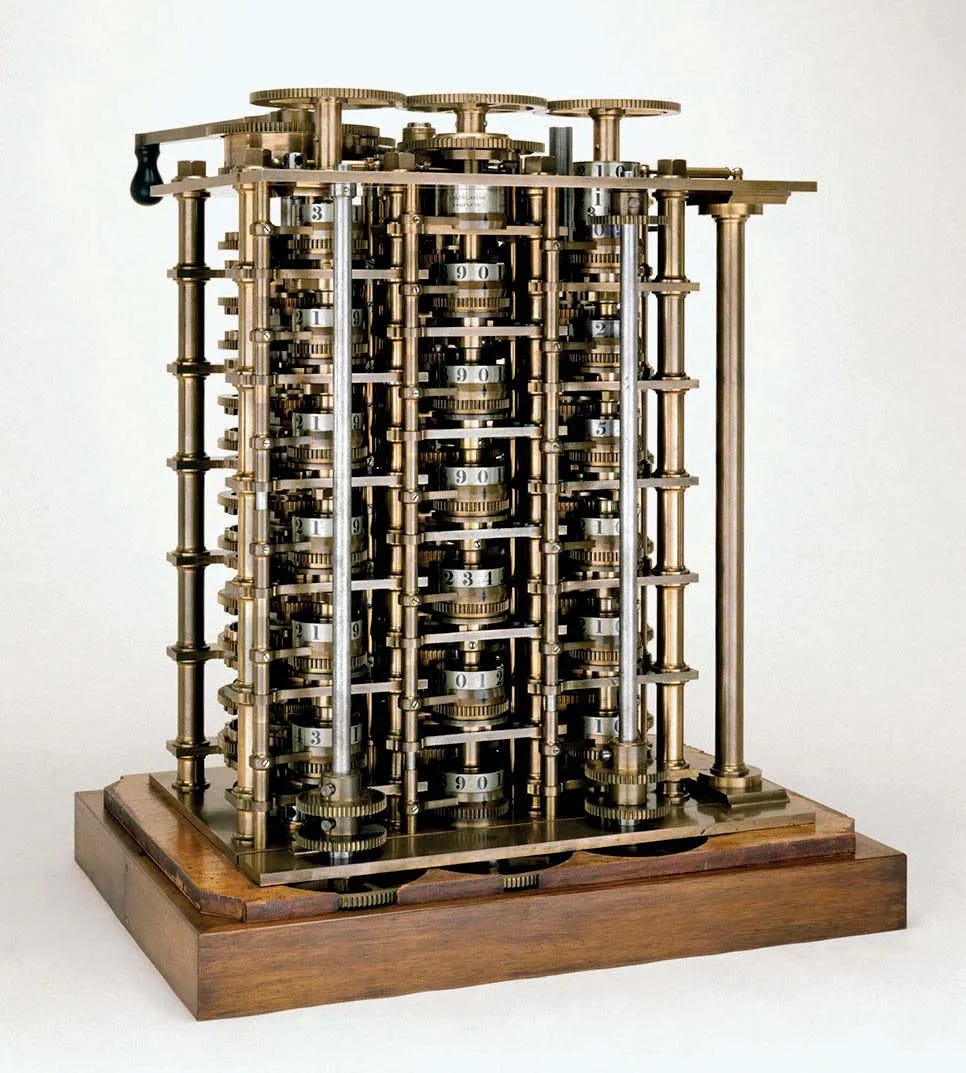

Babbage’s Difference Engine (1820s) to compute polynomials: Most useful functions in existence can be approximated via polynomialsWhile such algorithms are as old as civilisation itself, the notion of formal-

ising them started with the Persian mathematician Al Khwarizmi, from whom

the word “Algorithm” is derived [2]. While constructing physical devices to

execute such algorithms have been around since the times of Charles Babbage

[1], a solid theoretical foundation was still absent for centuries. By a theoretical

foundation, we mean specifically the following questions:

1. Do such mechanistic, step by step algorithms exist for all sort of problems?

2. By extension, what sort of problems can be solved? Are there different

classes of such problems? Are there degrees of solvability?

3. What would be an abstract representation of a physical device that could

in principle solve these problems? How would it function?

These three core questions were formalised further and preliminarily an-

swered in the 1930s, (millennia after the first algorithms on the real and rational

numbers were developed), owing to the work, primarily, of Kurt Gödel, Alonzo

Church, and Alan Turing.

While the specifics of their works (specifically Gödel’s) harboured subtle differences

between each other, in essence the conclusions of these works were the same. In

1931, Gödel published his seminal work on Incompleteness. To put it tersely,

he proved that any consistent (a system that entails no contradictions) for-

mal axiomatic system (like Peano arithmetic, for instance) contained logically

true statements that could not be proved from deductions within that system.

Additionally, he also proved the impossibility of this system proving its own

consistency.

Gödel’s work was closely followed by Church and Turing, who inde-

pendently tackled the solvability of the Entscheidungsproblem (The Halting

Problem), and proved it to be unsolvable.

In Turing’s paper [5] on the halting problem, he first introduced the abstract

computational model of the Turing machine - An abstract model that manipu-

lates symbols on an infinite tape, and updates its state according to a table of

rules. It can be shown that for any algorithm that runs on modern computers,

a corresponding Turing machine can be constructed to solve that problem [4].

In this vein, a “computable” number (or a function) is therefore any num-

ber or function that can be enumerated by a Turing machine via finite means.

For a real number, computability thus translates to an enumeration of the said

number to a desired finite precision of digits.

Hypercomputation refers to the movement that aims to build physical devices

that can represent uncomputable numbers. It is this idea that Davis critiques

in his paper.

Firstly, it must be pointed out that while the Turing machine admits infinite

space and memory, physical computers do not. Every calculation that occurs

on these systems represent the reals to some degree of approximation. Addi-

tionally, the standard reals like pi and e are both computable. To say that one

can build a physical system that ventures beyond Turing machines is to say that

one can build a physical device that handles uncomputable (infinite precision)

numbers (or functions).

Certain devices [3], that have apparently solved this problem are shown by

Davis to be incorrect, owing to a circular reasoning employed by the design-

ers wherein if non computable inputs are permitted to the system, non com-

putable outputs are attainable. He shows that, for one of these models, the claim

that this model had attained an output previously shown to be non-attainable

(meaning a Turing machine would not halt on the set of inputs provided), thus

effectively meant that the model could compute things previously proven to

be uncomputable on a Turing machine, is wrong, because the inputs provided

to this model were indeed computable, and that the only way this model in

question could attain non-computable outputs is if it were fed non-computable

inputs, which is an obvious statement, and thus does not in any way go beyond

the claims Turing computability.

The core issue is, if it were available to compute non-computable inputs and

feed them into an abstract machine (say an oracle that tells us whether a prob-

lem is solvable), then the problem would trivially be solved. The problem there-

fore remains: Can we build physical devices that can compute un-computabe

functions (as previously proven)? Davis shows that the devices that claimed to

do this were in fact begging the question.

Secondly, the realisation of such a “revolutionary” physical theory that leads to non

computable numbers is inherently contradictory, as every new theory is

subject to experimental evidence constrained by instrument precision. If such a

non computable number of infinite precision could truly be generated by such a

theory, how would it every be verified by a physical device of inevitably discrete

means (limited space, memory)?

Thirdly, the hypercomputation movement fails to take into account compu-

tational complexity classes of algorithms. Assuming such a device existed, how

long does it take to generate a response? How does this runtime scale with the

size of the input query? A response to a query really isn’t useful if the response

time takes the timespan of the universe. While quantum computers may help

in this regard, Quantum computers can also be extended to the paradigm of

Turing computability [6], and certainly do not venture beyond this

limit as such.

In conclusion, the hypercomputation movement aims to build a revolutionary

physical theory that will not be physically possible to verify (if at all it were

true), does not take into account practical concerns of algorithmic complexity,

and has not been able to resolve the inherent issue of generating a program to

compute uncomputable inputs.

Turing’s legacy is safe.

References

[1] Jack Copeland. The Modern History of Computing. url: https://plato.

stanford.edu/entries/computing-history/.

[2] Ali Abdullah Daffa. The Muslim Contribution to Mathematics. Croom

Helm, 1977. isbn: 9780856644641.

[3] H. Siegelmann. “Computation Beyond the Turing Limit”. In: Science 268

(1995), pp. 545–548.

[4] Michael Sipser. Introduction to the Theory of Computation. PWS Publish-

ing, 1997. isbn: 053494728X.

[5] Alan M. Turing. “On Computable Numbers, with an Application to the

Entscheidungsproblem”. In: Proceedings of the London Mathematical So-

ciety 2.42 (1936), pp. 230–265. url: http://www.cs.helsinki.fi/u/

gionis/cc05/OnComputableNumbers.pdf.

[6] https://en.wikipedia.org/wiki/Church–Turing–Deutsch_principle